Using search to provide a news archive in SharePoint 2010 is a wellknown solution. Just add the core results web-part to a page and configure it to query for your news article content type and sort it in descending order. Then customize the result XSLT to tune the content and layout of the news excerpts to look like a new archive. Add also the search box, the search refiners and the results paging web-parts and you have a functional news archive in no time.

This post is about providing contextual navigation by adding "<< previous", "next >>" and "result" links to the article pages, to allow users to explore the result set in a step-by-step manner. Norwegians will reckognize this way of exploring results from

finn.no.

For a user or visitor to be able to navigate the results, the result set must be cached per user. The search results are in XML format, and it contains a sequential id and the URL for each hit. This allows the navigation control to use XPath to locate the current result by id, and get the URLs for the previous and next results. The user query must also be cached so that clicking the "result" link will show the expected search results.

Override the CoreResultsWebPart as shown in my

Getting Elevated Search Results in SharePoint 2010 post to add per-user caching of the search results. If your site allows for anonymous visitors, you need to decide on how to keep tab on them. In the code I've used the requestor IP address, which is not 100% foolproof, but this allows me to avoid using cookies for now.

namespace Puzzlepart.SharePoint.Presentation

{

[ToolboxItemAttribute(false)]

public class NewsArchiveCoreResultsWebPart : CoreResultsWebPart

{

public static readonly string ScopeNewsArticles

= "Scope=\"News Archive\"";

private static readonly string CacheKeyResultsXmlDocument

= "Puzzlepart_CoreResults_XmlDocument_User:";

private static readonly string CacheKeyUserQueryString

= "Puzzlepart_CoreResults_UserQuery_User:";

private int _cacheUserQueryTimeMinutes = 720;

private int _cacheUserResultsTimeMinutes = 30;

protected override void CreateChildControls()

{

try

{

base.CreateChildControls();

}

catch (Exception ex)

{

var error = SharePointUtilities.CreateErrorLabel(ex);

Controls.Add(error);

}

}

protected override XPathNavigator GetXPathNavigator(string viewPath)

{

//return base.GetXPathNavigator(viewPath);

SetCachedUserQuery();

XmlDocument xmlDocument = GetXmlDocumentResults();

SetCachedResults(xmlDocument);

XPathNavigator xPathNavigator = xmlDocument.CreateNavigator();

return xPathNavigator;

}

private XmlDocument GetXmlDocumentResults()

{

XmlDocument xmlDocument = null;

QueryManager queryManager =

SharedQueryManager.GetInstance(Page, QueryNumber).QueryManager;

Location location = queryManager[0][0];

string query = location.SupplementaryQueries;

if (query.IndexOf(ScopeNewsArticles,

StringComparison.CurrentCultureIgnoreCase) < 0)

{

string userQuery =

queryManager.UserQuery + " " + ScopeNewsArticles;

queryManager.UserQuery = userQuery.Trim();

}

xmlDocument = queryManager.GetResults(queryManager[0]);

return xmlDocument;

}

private void SetCachedUserQuery()

{

var qs = HttpUtility.ParseQueryString

(Page.Request.QueryString.ToString());

if (qs["resultid"] != null)

{

qs.Remove("resultid");

}

HttpRuntime.Cache.Insert(UserQueryCacheKey(this.Page),

qs.ToString(), null,

Cache.NoAbsoluteExpiration,

new TimeSpan(0, 0, _cacheUserQueryTimeMinutes, 0));

}

private void SetCachedResults(XmlDocument xmlDocument)

{

HttpRuntime.Cache.Insert(ResultsCacheKey(this.Page),

xmlDocument, null,

Cache.NoAbsoluteExpiration,

new TimeSpan(0, 0, _cacheUserResultsTimeMinutes, 0));

}

private static string UserQueryCacheKey(Page page)

{

string visitorId = GetVisitorId(page);

string queryCacheKey = String.Format("{0}{1}",

CacheKeyUserQueryString, visitorId);

return queryCacheKey;

}

private static string ResultsCacheKey(Page page)

{

string visitorId = GetVisitorId(page);

string resultsCacheKey = String.Format("{0}{1}",

CacheKeyResultsXmlDocument, visitorId);

return resultsCacheKey;

}

public static string GetCachedUserQuery(Page page)

{

string userQuery =

(string)HttpRuntime.Cache[UserQueryCacheKey(page)];

return userQuery;

}

public static XmlDocument GetCachedResults(Page page)

{

XmlDocument results =

(XmlDocument)HttpRuntime.Cache[ResultsCacheKey(page)];

return results;

}

private static string GetVisitorId(Page page)

{

//TODO: use cookie for anonymous visitors

string id = page.Request.ServerVariables["HTTP_X_FORWARDED_FOR"]

?? page.Request.ServerVariables["REMOTE_ADDR"];

if(SPContext.Current.Web.CurrentUser != null)

{

id = SPContext.Current.Web.CurrentUser.LoginName;

}

return id;

}

}

}

I've used sliding expiration on the cache to allow for the user to spend some time exploring the results. The result set is cached for a short time by default, as this can be quite large. The user query text is, however, small and cached for a long time, allowing the users to at least get their results back after a period of inactivity.

As suggested by

Mikael Svenson, an alternative to caching would be running the query again using the static QueryManager page object to get the result set. This would require using another result key element than the dynamic <id> number to ensure that the current result lookup is not scewed by new results being returned by the search. An example would be using a content type field such as "NewsArticlePermaId" if it exists.

Overriding the

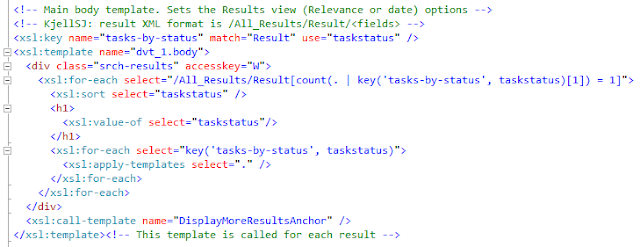

GetXPathNavigator method gets you the cached results that the navigation control needs. In addition, the navigator code needs to know which is the result set id of the current page. This is done by customizing the result XSLT and adding a "resultid" parameter to the $siteUrl variable for each hit.

. . .

<xsl:template match="Result">

<xsl:variable name="id" select="id"/>

<xsl:variable name="currentId" select="concat($IdPrefix,$id)"/>

<xsl:variable name="url" select="url"/>

<xsl:variable name="resultid" select="concat('?resultid=', $id)" />

<xsl:variable name="siteUrl" select="concat($url, $resultid)" />

. . .

The result set navigation control is quite simple, looking up the current result by id and getting the URLs for the previous and next results (if any) and adding the "resultid" to keep the navigation logic going forever.

namespace Puzzlepart.SharePoint.Presentation

{

public class NewsArchiveResultsNavigator : Control

{

public string NewsArchivePageUrl { get; set; }

private string _resultId = null;

private XmlDocument _results = null;

protected override void CreateChildControls()

{

base.CreateChildControls();

_resultId = Page.Request.QueryString["resultid"];

_results = NewsArchiveCoreResultsWebPart.GetCachedResults(this.Page);

if(_results == null || _resultId == null)

{

//render nothing

return;

}

AddResultsNavigationLinks();

}

private void AddResultsNavigationLinks()

{

string prevUrl = GetPreviousResultPageUrl();

var linkPrev = new HyperLink()

{

Text = "<< Previous",

NavigateUrl = prevUrl

};

linkPrev.Enabled = (prevUrl.Length > 0);

Controls.Add(linkPrev);

string resultsUrl = GetSearchResultsPageUrl();

var linkResults = new HyperLink()

{

Text = "Result",

NavigateUrl = resultsUrl

};

Controls.Add(linkResults);

string nextUrl = GetNextResultPageUrl();

var linkNext = new HyperLink()

{

Text = "Next >>",

NavigateUrl = nextUrl

};

linkNext.Enabled = (nextUrl.Length > 0);

Controls.Add(linkNext);

}

private string GetPreviousResultPageUrl()

{

return GetSpecificResultUrl(false);

}

private string GetNextResultPageUrl()

{

return GetSpecificResultUrl(true);

}

private string GetSpecificResultUrl(bool useNextResult)

{

string url = "";

if (_results != null)

{

string xpath =

String.Format("/All_Results/Result[id='{0}']", _resultId);

XPathNavigator xNavigator = _results.CreateNavigator();

XPathNavigator xCurrentNode = xNavigator.SelectSingleNode(xpath);

if (xCurrentNode != null)

{

bool hasNode = false;

if (useNextResult)

hasNode = xCurrentNode.MoveToNext();

else

hasNode = xCurrentNode.MoveToPrevious();

if (hasNode &&

xCurrentNode.LocalName.Equals("Result"))

{

string resultId =

xCurrentNode.SelectSingleNode("id").Value;

string fileUrl =

xCurrentNode.SelectSingleNode("url").Value;

url = String.Format("{0}?resultid={1}",

fileUrl, resultId);

}

}

}

return url;

}

private string GetSearchResultsPageUrl()

{

string url = NewsArchivePageUrl;

string userQuery =

NewsArchiveCoreResultsWebPart.GetCachedUserQuery(this.Page);

if (String.IsNullOrEmpty(userQuery))

{

url = String.Format("{0}?resultid={1}", url, _resultId);

}

else

{

url = String.Format("{0}?{1}&resultid={2}",

url, userQuery, _resultId);

}

return url;

}

}

}

Note how I use the "resultid" URL parameter to discern between normal navigation to a page and result set navigation between pages. If the resultid parameter is not there, then the navigation controls are hidden. The same goes for when there are no cached results. The "result" link could always be visible for as long as the user's query text is cached.

You can also provide this result set exploration capability for all kinds of pages, not just for a specific page layout, by adding the result set navigation control to your master page(s). The result set <id> and <url> elements are there for all kind of pages stored in your SharePoint solution.