These are the infrastructure challenges you are most likely to encounter outside the native domain:

- Outlook desktop client: access to MSCRM platform services

- Outlook laptop client: desktop client access, plus going offline and online

- Exchange: routing of incoming e-mails to MSCRM users and queues

- SQL Server Reporting Services: access to SRS services for reporting

If you have multiple AD forests without explicit trusts, users outside the native domain will get only basic MSCRM functionality: the web client over HTTPS with basic authentication. These users will not be able to use either the online or the offline Outlook client (MSCRM desktop/laptop client), as they are not logged on to the domain. Nor will they get full reporting functionality with SQL Server Reporting Services (SRS) in this scenario.

If you have multiple Exchange organizations without explicit trusts, users outside the native organization (forest) will get only basic send e-mail functionality. The 'e-mail router' will not be able to automatically route incoming e-mails to them, as their mailboxes are not in the native organization. In addition, the router cannot access the native AD domain when it is not explicitly trusted.

If you have users in an NT4 domain with a one-way trust from the native domain, these users will be able to use both the web and the desktop client. They will not be able to use the laptop client, as they cannot go offline and online; incoming e-mail will not be routed to them; and they will not get full reporting functionality.

Next, here is an overview of how multiple AD forests and Exchange organizations can be configured to support full MSCRM functionality:

First of all, forget about getting full MSCRM functionality for NT4 domains. Microsoft no longer supports NT4, so you're on your own.

The good news is that your users across several AD forests can get the full spectrum of functionality available in MSCRM 3.0. This just requires some configuration of your infrastructure. The most important aspect is that you must add at least one-way trusts from the native MSCRM domain to the other domains. Trusts require a LAN, WAN, or VPN connection between your domains; full MSCRM functionality over plain HTTPS is not possible.

The MSCRM Outlook client requires Windows Authentication / Kerberos against the native AD domain and the use of the default security credentials on the client PC. Thus, by adding one-way trusts, your users will be able to use both the MSCRM desktop client and the laptop client. Sending e-mail will of course work, while routing incoming e-mails to users and queues requires some more configuration (see below).

Note that basic SRS reporting functionality will be available with just one-way trusts. For full reporting functionality, two-way trusts are needed between the AD forests. Alternatively, you can configure a fixed identity on the clients for accessing the SRS reports (KB article to be published).
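To keep the trust scenarios above straight, here is a minimal Python sketch that encodes which client and reporting features each setup gets, exactly as described in this post. The `Trust` enum, `ClientCapabilities` and `capabilities()` are hypothetical names for illustration only, not part of any MSCRM API, and incoming e-mail routing is deliberately left out because it depends on the e-mail router configuration rather than on the trust alone.

```python
from dataclasses import dataclass
from enum import Enum


class Trust(Enum):
    """Hypothetical model of a user's domain relative to the native MSCRM domain."""
    NONE = "separate AD forest, no explicit trust"
    NT4_ONE_WAY = "NT4 domain, one-way trust"
    AD_ONE_WAY = "AD forest, one-way trust"
    AD_TWO_WAY = "AD forest, two-way trust"


@dataclass(frozen=True)
class ClientCapabilities:
    web_client: bool      # with Trust.NONE: HTTPS + basic authentication only
    desktop_client: bool  # Outlook client, online
    laptop_client: bool   # Outlook client that can go offline and online
    full_reporting: bool  # full SQL Server Reporting Services functionality


def capabilities(trust: Trust, srs_fixed_identity: bool = False) -> ClientCapabilities:
    """Summarize the features per trust setup as stated in this post.

    srs_fixed_identity models the alternative to a two-way trust: a fixed
    identity configured on the clients for accessing SRS reports. It is only
    considered for AD forests with a one-way trust.
    """
    if trust is Trust.NONE:
        return ClientCapabilities(True, False, False, False)
    if trust is Trust.NT4_ONE_WAY:
        return ClientCapabilities(True, True, False, False)
    if trust is Trust.AD_ONE_WAY:
        return ClientCapabilities(True, True, True, srs_fixed_identity)
    return ClientCapabilities(True, True, True, True)  # Trust.AD_TWO_WAY


if __name__ == "__main__":
    for t in Trust:
        print(f"{t.value}: {capabilities(t)}")
```

The point of writing it as a lookup is that the only setup giving full reporting without extra client configuration is the two-way AD trust; everything else degrades in the steps listed above.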

MSCRM 3.0 now supports having multiple Exchange servers in your native Exchange 'organization', including Exchange clusters. It is no longer required that you have a single Exchange server handling all incoming internet e-mails for your 'organization', as the functionality of the MSCRM e-mail router has changed in v3.0.

The v1.2 router had this limitation, which made it impossible to have one common MSCRM database in a company with multiple Exchange organizations (mail domains). For example, I work for a company with several subsidiaries and thus several mail domains (itera.no, objectware.no, gazette.no, etc.). With v1.2 we could not get full mail functionality in MSCRM; with MSCRM 3.0, we finally can.

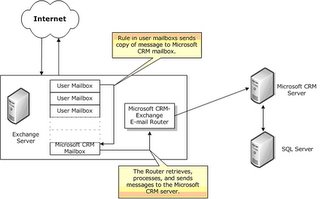

The router no longer inspects all incoming mail messages, but rather a specific MSCRM mailbox.

The inspection of all incoming mail has been replaced by Exchange rules, which must be deployed to each Exchange server that hosts one or more mailboxes of MSCRM users and queues.


The Exchange rules, the MSCRM mailbox and the E-mail Router by default require mailboxes to be in the native domain and native 'organization', as the router must be able to access the MSCRM platform services to do its work.

You can deploy the mail routing rules and components to other Exchange organizations, provided that you configure the routing service to use an identity that has access to the MSCRM platform services. This will of course require that you have at least a one-way trust between the AD domains.
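As a planning aid, the deployment rule can be sketched in Python: collect every Exchange server that hosts a routable MSCRM user or queue mailbox (these need the Exchange rules), and flag mailboxes the router cannot serve. This is a hypothetical helper, not an MSCRM tool; the `Mailbox` fields, the server names and the use of itera.no as the native organization are assumptions for illustration. A mailbox outside the native organization is treated as routable only if the routing service identity has access to the MSCRM platform services and its organization sits behind at least a one-way trust.

```python
from collections.abc import Container
from dataclasses import dataclass


@dataclass(frozen=True)
class Mailbox:
    owner: str            # MSCRM user or queue (hypothetical)
    exchange_server: str  # Exchange server hosting the mailbox (hypothetical)
    organization: str     # Exchange organization / mail domain


def plan_rule_deployment(
    mailboxes: list[Mailbox],
    native_org: str,
    trusted_orgs: Container[str] = frozenset(),
    router_identity_has_crm_access: bool = False,
) -> tuple[list[str], list[str]]:
    """Return (servers needing the Exchange rules, owners that cannot be routed).

    trusted_orgs: organizations whose AD forest has at least a one-way trust
    with the native domain (an assumption of this sketch).
    """
    servers: set[str] = set()
    unroutable: list[str] = []
    for m in mailboxes:
        foreign_ok = router_identity_has_crm_access and m.organization in trusted_orgs
        if m.organization == native_org or foreign_ok:
            servers.add(m.exchange_server)  # rules go on every hosting server
        else:
            unroutable.append(m.owner)
    return sorted(servers), sorted(unroutable)


if __name__ == "__main__":
    boxes = [
        Mailbox("sales queue", "exch-itera-01", "itera.no"),
        Mailbox("alice", "exch-itera-02", "itera.no"),
        Mailbox("bob", "exch-objectware-01", "objectware.no"),
    ]
    # Default setup: only the native organization is served.
    print(plan_rule_deployment(boxes, native_org="itera.no"))
    # → (['exch-itera-01', 'exch-itera-02'], ['bob'])
```

Passing `trusted_orgs={"objectware.no"}` together with `router_identity_has_crm_access=True` moves `exch-objectware-01` into the list of servers that need the rules and leaves no unroutable mailboxes.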